In an era where infrastructure is code, some aspects of networking still feel like a black box. Proprietary networking platforms, such as Cisco’s IOS and Juniper’s Junos, have long dominated the switch market. That closed grip on hardware and software created real vendor lock-in and inflated costs. Arista has made a play with its Linux-based EOS, but let’s be honest: it still relies on Arista’s own hardware. That changes when Cumulus Linux and the wider open-source networking ecosystem enter the chat. It’s a powerful approach that shatters the proprietary pattern, giving you freedom and control over your infrastructure.

The Linux Revolution in Networking

At its core, Cumulus Linux is a Debian-based network operating system designed specifically for switches. Instead of a black box with a proprietary CLI, you get a full-fledged Linux distribution running on your network hardware. This isn’t just a fancy shell; the Linux kernel itself does the heavy lifting, so you can treat your switches like any other Linux server. Consequently, your existing Linux scripting skills become your new network automation and security superpowers, drastically shortening the learning curve.

- Familiarity and Skill Transfer: If you already have a decent grasp of Linux commands, Cumulus lets you put that knowledge to work immediately.

- Automation at Scale: Because it’s Linux, you can use standard automation tools like Ansible, Puppet, or simple Python scripts. Imagine deploying an entire data centre fabric with a few lines of code, replacing hours of manual CLI input. Now that’s the definition of efficiency.

- Flexibility and Customisation: You are no longer limited by vendor choice. With Cumulus, you can install standard Debian packages, run custom applications, and tailor your network exactly to your needs. This freedom is especially valuable when integrating cloud security tooling.

Open Networking and ONIE: The Unlocked Hardware

The concept of Open Networking is fundamental to Cumulus. It’s about breaking free from vendor lock-in by separating the network hardware from the network operating system. This disaggregation empowers you to choose the best-of-breed components for your infrastructure.

A critical enabler of open networking is the Open Network Install Environment (ONIE). Think of ONIE as the BIOS or bootloader for bare-metal switches. It’s a small Linux-based environment that comes pre-installed on ONIE-compliant switches. Essentially, it allows you to install any compatible network operating system onto the switch, just like you would install an OS on a server. This key move streamlines provisioning and helps reduce your hardware inventory complexity.

Navigating with Precision: The NVUE Object Model

While Cumulus Linux hands you the raw power of a standard Linux CLI, it also introduces a modern, object-oriented configuration framework called the NVIDIA User Experience (NVUE). NVUE is the declarative configuration interface and API for your Cumulus Linux switch. Instead of manually editing flat files or executing step-by-step commands, NVUE centralises the entire configuration in a structured object model, which makes the device inherently automation-friendly. NVUE replaces the older Network Command Line Utility (NCLU, the net commands), shifting the philosophy from imperative CLI commands to a declarative object model.

- You simply define the desired state for objects such as interface or router bgp.
- NVUE then applies the changes needed to reach that state, making configurations robust and far less prone to human error.
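To make the declarative idea concrete, here is a toy Python sketch of desired-state reconciliation: it compares a hypothetical current state with a desired state and derives only the set/unset operations needed. The flat key names such as "swp5 access" and the extra port swp7 are illustrative assumptions, and this is not how NVUE is implemented internally; it only shows why re-applying the same desired state changes nothing.

```python
# Toy desired-state reconciliation (illustrative only, not NVUE internals).
# Keys like "swp5 access" and the port swp7 are hypothetical examples.


def plan_changes(current: dict, desired: dict) -> list[tuple[str, str, object]]:
    """Return ("set" | "unset", key, value) operations that turn current into desired."""
    ops = []
    for key, value in desired.items():
        if current.get(key) != value:
            ops.append(("set", key, value))  # missing or different: set it
    for key, value in current.items():
        if key not in desired:
            ops.append(("unset", key, value))  # no longer wanted: remove it
    return ops


current = {"swp4 access": 20, "swp5 access": 10, "swp7 access": 40}
desired = {"swp4 access": 20, "swp5 access": 10, "swp6 access": 30}

for op in plan_changes(current, desired):
    print(op)
```

Calling plan_changes(desired, desired) returns an empty list: that idempotence is what makes a declarative model safe to re-apply.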

This inherent API-first design supports the CRUDX (Create, Retrieve, Update, Delete, eXecute) concept that’s central to modern API interactions, making automation seamless and predictable.
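As a rough illustration, the CRUDX operations can be lined up against the NVUE CLI verbs: nv set creates or updates an object, nv show retrieves it, nv unset deletes it, and nv action executes an operation. The mapping table and the nvue_command helper below are hypothetical conveniences for this article, so check the NVUE documentation for your Cumulus Linux release before relying on them.

```python
# Hypothetical mapping of CRUDX operations onto NVUE CLI verbs. Create and
# Update share "nv set" because setting an object that does not exist creates it.
CRUDX_TO_NVUE = {
    "create": "nv set",
    "retrieve": "nv show",
    "update": "nv set",
    "delete": "nv unset",
    "execute": "nv action",
}


def nvue_command(operation: str, path: str) -> str:
    """Build an NVUE command line for a CRUDX operation on an object path."""
    verb = CRUDX_TO_NVUE[operation.lower()]
    return f"{verb} {path}"


print(nvue_command("Retrieve", "bridge domain br_default"))
print(nvue_command("Update", "bridge domain br_default vlan 10,20,30"))
```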

The Art of the Data Centre: Understanding Clos Architecture

Before we get our hands dirty with the lab, let’s look at the big picture. The modern data centre demands a new topology because applications now generate a flood of east-west (server-to-server) traffic. That is why the two-tier Clos architecture, also known as spine-leaf, has become the standard design.

This design is brilliant in its simplicity:

- Spine Layer: Provides high-speed connectivity between leaf switches.

- Leaf Layer: Connects directly to the servers.

Crucially, every leaf connects to every spine, so traffic between any two leaves crosses exactly one spine and always travels the same number of hops. This predictable path gives low, consistent latency. When the fabric runs a routing protocol such as BGP as a pure Layer 3 design, equal-cost multipath (ECMP) routing lets every uplink forward traffic at once (active-active), delivering superb scale without the nightmares of classic STP convergence.
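The arithmetic behind this is easy to sketch. With a full mesh, the number of links equals spines times leaves, and every leaf-to-leaf path is leaf, then spine, then leaf, giving one equal-cost path per spine. The Python below models this with the lab's names (S1 and S2 as spines, S3 and S4 as leaves); the helper names and the larger 4-spine, 32-leaf example are illustrative. In a routed fabric these parallel paths are what ECMP balances across; in the Layer 2 lab that follows, STP will block some of them.

```python
from itertools import product


def clos_links(spines: list[str], leaves: list[str]) -> set[tuple[str, str]]:
    """Two-tier Clos: every leaf has exactly one link to every spine."""
    return set(product(leaves, spines))


def leaf_to_leaf_paths(spines, leaves, src, dst):
    """Each leaf-to-leaf path is leaf -> spine -> leaf, so there is one path per spine."""
    links = clos_links(spines, leaves)
    return [(src, spine, dst) for spine in spines
            if (src, spine) in links and (dst, spine) in links]


spines = ["S1", "S2"]
leaves = ["S3", "S4"]
print(len(clos_links(spines, leaves)))                 # 4
print(leaf_to_leaf_paths(spines, leaves, "S3", "S4"))  # [('S3', 'S1', 'S4'), ('S3', 'S2', 'S4')]

# Adding spines adds parallel paths without adding hops.
big_spines = [f"spine{i}" for i in range(1, 5)]
big_leaves = [f"leaf{i}" for i in range(1, 33)]
print(len(clos_links(big_spines, big_leaves)))                              # 128
print(len(leaf_to_leaf_paths(big_spines, big_leaves, "leaf1", "leaf2")))  # 4
```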

Your Lab, Your Playground: GNS3 Setup

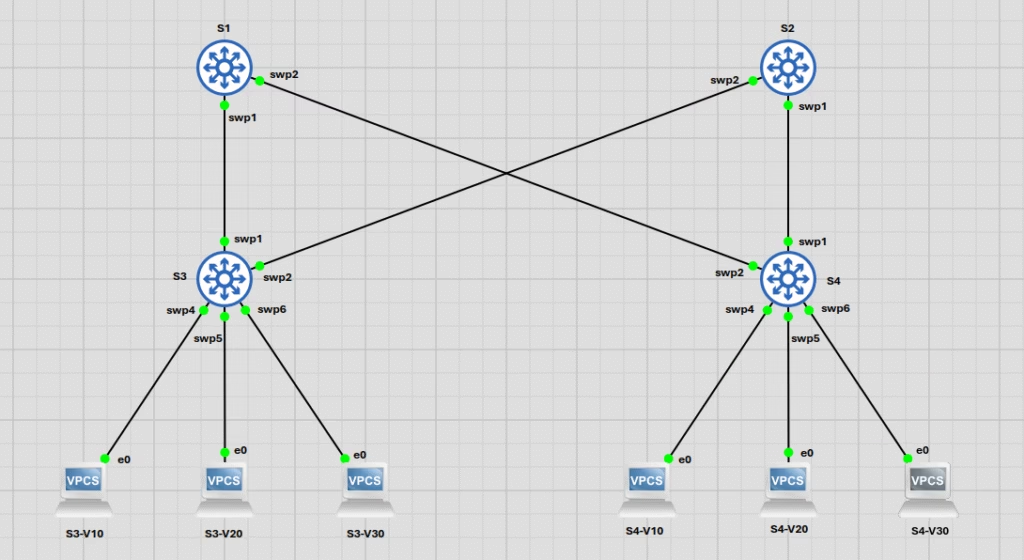

To truly grasp open networking configuration on Cumulus Linux, hands-on experience is paramount. We use GNS3 for risk-free experimentation. Our practice topology is a two-tier spine-leaf: S1 and S2 are the spines, and S3 and S4 are the leaves.

Our Practice Topology: A Two-Tier Clos in Action

The connections are specific:

- S3 connects to S1 on swp1 and to S2 on swp2.
- S4 connects to S1 on swp1 and to S2 on swp2.
- Endpoints connect to S3 and S4 on swp5 (V10), swp4 (V20), and swp6 (V30), matching the access-port VLANs configured below.

First Steps: Getting Our Hands Dirty

First, set your hostnames (for example, nv set system hostname S1). Interfaces in Cumulus are named swp (switch port) followed by a number, such as swp1. After any change, always run nv config apply to push your pending changes to the running configuration, and then nv config save to write them to the startup configuration so they survive a reboot. This two-step handshake is non-negotiable for engineers who value persistence.
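If you are bootstrapping all four lab switches, a few lines of Python can print each switch's command sequence so the apply/save handshake is never forgotten. The function name bootstrap_commands is hypothetical, and it emits only the commands described above; you would still paste or push the output to each switch yourself.

```python
def bootstrap_commands(hostname: str) -> list[str]:
    """NVUE commands to set a hostname, then apply and persist the change."""
    return [
        f"nv set system hostname {hostname}",
        "nv config apply",  # push pending changes to the running configuration
        "nv config save",   # persist them to the startup configuration
    ]


for switch in ["S1", "S2", "S3", "S4"]:
    print(f"# {switch}")
    print("\n".join(bootstrap_commands(switch)))
```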

VLAN Trunk & Access Port Configuration

In the Linux networking world, Layer 2 operations such as VLAN tagging are handled by a bridge, which acts like a virtual switch inside the kernel. Unlike proprietary OSes, which keep VLAN handling hidden inside the vendor's software and ASIC, Cumulus exposes it through this standard Linux abstraction, and its switchd daemon then programs the ASIC so that forwarding still happens in hardware. We will use the default VLAN-aware bridge, br_default.
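To see why a VLAN-aware bridge keeps VLANs apart, here is a toy Python model of how it floods a broadcast frame (such as an ARP request) that arrives on an access port. The port-to-VLAN map mirrors the S3 configuration in this section; the data structures and function are illustrative assumptions, and the model ignores STP and MAC learning.

```python
# Toy VLAN-aware bridge (not Cumulus code). Access ports belong to one VLAN and
# send frames untagged; trunk ports carry several VLANs as 802.1Q tags.
ACCESS = {"swp4": 20, "swp5": 10, "swp6": 30}          # access port -> VLAN
TRUNKS = {"swp1": {10, 20, 30}, "swp2": {10, 20, 30}}  # trunk port -> allowed VLANs


def flood_ports(in_port: str) -> list[str]:
    """Egress ports for a broadcast frame received on an access port."""
    vlan = ACCESS[in_port]  # the frame is classified into the ingress port's VLAN
    out = [p for p, v in ACCESS.items() if v == vlan and p != in_port]
    out += [p for p, allowed in TRUNKS.items() if vlan in allowed]  # tagged uplinks
    return sorted(out)


print(flood_ports("swp5"))  # ['swp1', 'swp2']: uplinks only, never swp4 or swp6
```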

Configuration on S3 (and identically on S4):

We assign the interfaces to the br_default domain:

nv set interface swp1-2,4-6 bridge domain br_default

Then, configure the VLANs allowed on this domain (10, 20, 30):

nv set bridge domain br_default vlan 10,20,30

Finally, set the access ports to their respective VLANs:

nv set interface swp5 bridge domain br_default access 10

nv set interface swp4 bridge domain br_default access 20

nv set interface swp6 bridge domain br_default access 30
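Because S3 and S4 are configured identically, this is a natural place to generate the commands rather than type them twice. The sketch below is a hypothetical Python helper that rebuilds the same NVUE commands from a port-to-VLAN map. It lists each uplink and access port individually instead of using the swp1-2,4-6 range syntax, which assigns the same ports but is simpler to generate.

```python
# Hypothetical generator for the leaf bridge configuration shown above.
UPLINKS = ["swp1", "swp2"]                       # trunks towards S1 and S2
ACCESS_PORTS = {"swp5": 10, "swp4": 20, "swp6": 30}  # access port -> VLAN


def leaf_bridge_commands(uplinks: list[str], access_ports: dict[str, int]) -> list[str]:
    # 1. Put every uplink and access port into the default VLAN-aware bridge.
    cmds = [f"nv set interface {port} bridge domain br_default"
            for port in uplinks + sorted(access_ports)]
    # 2. Allow every VLAN used by an access port on the bridge (and so on the trunks).
    vlans = ",".join(str(v) for v in sorted(set(access_ports.values())))
    cmds.append(f"nv set bridge domain br_default vlan {vlans}")
    # 3. Pin each access port to its VLAN.
    for port, vlan in sorted(access_ports.items()):
        cmds.append(f"nv set interface {port} bridge domain br_default access {vlan}")
    # 4. The apply/save handshake.
    cmds += ["nv config apply", "nv config save"]
    return cmds


for cmd in leaf_bridge_commands(UPLINKS, ACCESS_PORTS):
    print(cmd)
```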

Configuring the Spine Switches: S1 and S2

The spines must be configured as trunks to carry traffic for all required VLANs.

S1 and S2 Trunk Ports:

nv set bridge domain br_default vlan 10,20,30

nv set interface swp1-2 bridge domain br_default

Spanning Tree Protocol (STP): Keeping Loops at Bay

Frankly, in any Layer 2 network with redundant paths, a single loop can trigger a broadcast storm that brings everything to a halt, reminiscent of Lagos traffic during rush hour. STP prevents this by blocking redundant links until they are needed. Cumulus defaults to the faster Rapid Spanning Tree Protocol (RSTP, 802.1w).

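To make our network’s behaviour explicit, we configure STP bridge priorities. The bridge with the lowest bridge ID (priority first, then MAC address as the tiebreaker) becomes the root, and priorities must be multiples of 4096. Giving S1 the lower priority makes it the root bridge, and giving S2 the next-lowest priority makes it the secondary root bridge.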
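The election rule is simple enough to sketch. The toy Python below compares bridge IDs as (priority, MAC) tuples and picks the lowest. The MAC addresses are made up, and S3 and S4 are assumed to keep the default priority of 32768; removing S1 shows why S2's 28672 makes it the secondary root.

```python
def validate_priority(priority: int) -> int:
    """RSTP bridge priorities are multiples of 4096 between 0 and 61440."""
    if priority % 4096 != 0 or not 0 <= priority <= 61440:
        raise ValueError(f"STP priority must be a multiple of 4096 in 0-61440, got {priority}")
    return priority


def elect_root(bridges: dict[str, tuple[int, str]]) -> str:
    """bridges maps name -> (priority, MAC); the lowest bridge ID becomes root."""
    for priority, _mac in bridges.values():
        validate_priority(priority)
    # MACs use one lowercase format, so string order matches numeric order here.
    return min(bridges, key=lambda name: bridges[name])


bridges = {
    "S1": (24576, "0c:00:00:00:00:01"),  # hypothetical MACs
    "S2": (28672, "0c:00:00:00:00:02"),
    "S3": (32768, "0c:00:00:00:00:03"),  # assumed default priority
    "S4": (32768, "0c:00:00:00:00:04"),
}
print(elect_root(bridges))  # S1
without_s1 = {name: bid for name, bid in bridges.items() if name != "S1"}
print(elect_root(without_s1))  # S2
```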

On S1, set the STP priority:

nv set bridge domain br_default stp priority 24576

On S2, set the STP priority:

nv set bridge domain br_default stp priority 28672

Enabling STP Port Admin Edge and BPDU Guard

When dealing with access ports connected to end devices, such as servers, you don’t expect them to create a Layer 2 loop. In fact, we want these ports to transition to a forwarding state instantly. This is where STP Port Admin Edge comes in, which is the Cumulus equivalent of Cisco’s PortFast feature.

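However, always remember the principle of defence-in-depth: if someone accidentally connects a switch to an access port, it could create a dangerous loop. That’s why you must also enable BPDU Guard as a critical network security layer: if a port with BPDU Guard receives a BPDU, the switch disables that port instead of letting it join the STP topology.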
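The interaction between the two features can be sketched as a tiny state model. The class below is a hypothetical illustration, not Cumulus code, and its state names are simplified: an admin-edge port forwards as soon as its link comes up, and with BPDU Guard on, receiving a BPDU disables the port.

```python
class AccessPort:
    """Simplified access port with STP admin-edge and BPDU Guard flags."""

    def __init__(self, name: str, admin_edge: bool = True, bpdu_guard: bool = True):
        self.name = name
        self.admin_edge = admin_edge
        self.bpdu_guard = bpdu_guard
        self.state = "down"

    def link_up(self) -> None:
        # Edge ports go straight to forwarding; other ports start in discarding
        # and wait for RSTP to decide their role.
        self.state = "forwarding" if self.admin_edge else "discarding"

    def receive_bpdu(self) -> None:
        if self.bpdu_guard:
            # A BPDU means another switch is attached: shut the port down.
            self.state = "disabled"
        else:
            # Without BPDU Guard the port only loses edge status; normal RSTP
            # processing would take over from here (not modelled).
            self.admin_edge = False


server_port = AccessPort("swp4")
server_port.link_up()
print(server_port.state)  # forwarding
server_port.receive_bpdu()  # someone plugs a switch into swp4
print(server_port.state)  # disabled
```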

On S3 and S4, use the following commands for the access ports (swp4-6):

nv set bridge domain br_default stp state up

nv set interface swp4-6 bridge domain br_default stp admin-edge on

nv set interface swp4-6 bridge domain br_default stp bpdu-guard on

Verifying Your Configuration

Before verifying, remember to apply and save your changes: nv config apply and nv config save.

After configuring STP, it’s always a good idea to verify the changes. While nv config show is the go-to for seeing the entire configuration (just like show run), you can use more surgical commands for specific checks. These nv show commands are invaluable:

- nv show bridge domain br_default: Gives a high-level summary of the bridge, its ports, and VLANs.
- nv show interface: Shows the status of all interfaces on the switch.
- nv show interface swp1: Dives deep into a single port, showing its bridge, VLAN, and STP details.

To view the overall STP state for the bridge domain:

nv show bridge domain br_default stp state

nv show bridge domain br_default stp

To see the STP state for a specific interface, like the trunk port swp1:

nv show interface swp1 bridge domain br_default stp

Finally, to get a comprehensive view of your entire configuration, use the nv config show command.

Verifying the L2 Fabric: Does It Ping?

Configuration is one thing; data plane verification is the moment of truth. Let’s prove our L2 fabric works as expected.

1. Configure VPC IPs

We give each virtual PC an IP address in the correct subnet for its VLAN.

- VLAN 10 (Subnet 192.168.10.0/24):
  - On S3-V10: ip 192.168.10.100/24
  - On S4-V10: ip 192.168.10.101/24
- VLAN 20 (Subnet 192.168.20.0/24):
  - On S3-V20: ip 192.168.20.100/24
  - On S4-V20: ip 192.168.20.101/24
- VLAN 30 (Subnet 192.168.30.0/24):
  - On S3-V30: ip 192.168.30.100/24
  - On S4-V30: ip 192.168.30.101/24
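Before pinging, it is worth sanity-checking the address plan. This Python sketch uses the standard ipaddress module to confirm that each VPC address falls inside its VLAN's subnet, and it shows why the tests below behave as they do: hosts in the same VLAN share a subnet, while hosts in different VLANs would need a router (and a default gateway on each VPC) to communicate. The dictionaries simply restate the plan above; the function names are illustrative.

```python
import ipaddress

VLAN_SUBNETS = {
    10: ipaddress.ip_network("192.168.10.0/24"),
    20: ipaddress.ip_network("192.168.20.0/24"),
    30: ipaddress.ip_network("192.168.30.0/24"),
}
VPCS = {  # VPC name -> (VLAN, address)
    "S3-V10": (10, "192.168.10.100/24"),
    "S4-V10": (10, "192.168.10.101/24"),
    "S3-V20": (20, "192.168.20.100/24"),
    "S4-V20": (20, "192.168.20.101/24"),
    "S3-V30": (30, "192.168.30.100/24"),
    "S4-V30": (30, "192.168.30.101/24"),
}


def check_plan() -> list[str]:
    """Return the names of VPCs whose address is outside their VLAN's subnet."""
    return [name for name, (vlan, addr) in VPCS.items()
            if ipaddress.ip_interface(addr).network != VLAN_SUBNETS[vlan]]


def same_subnet(a: str, b: str) -> bool:
    """True if two VPCs can reach each other without a router."""
    return (ipaddress.ip_interface(VPCS[a][1]).network
            == ipaddress.ip_interface(VPCS[b][1]).network)


print(check_plan())                     # []
print(same_subnet("S3-V10", "S4-V10"))  # True
print(same_subnet("S3-V10", "S3-V20"))  # False
```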

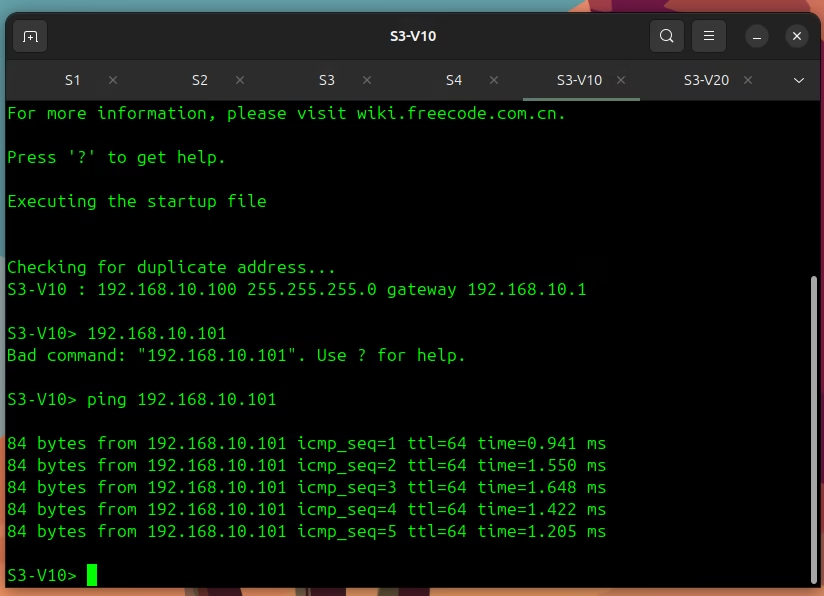

2. Test 1: Intra-VLAN Connectivity (Success)

Let’s test connectivity between two hosts in the same VLAN but on different switches. This confirms that our VLAN-aware bridges and trunks are functioning correctly across the fabric.

From S3-V10, ping S4-V10: ping 192.168.10.101

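As you can see, the ping is successful. The packet travels from S3-V10 up to S3, across a trunk to the spine S1, down to S4, and finally to S4-V10. Because S1 is the root bridge, STP blocks one of the redundant links through S2, so traffic between the leaves should take the path through S1. Our L2 fabric is alive.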

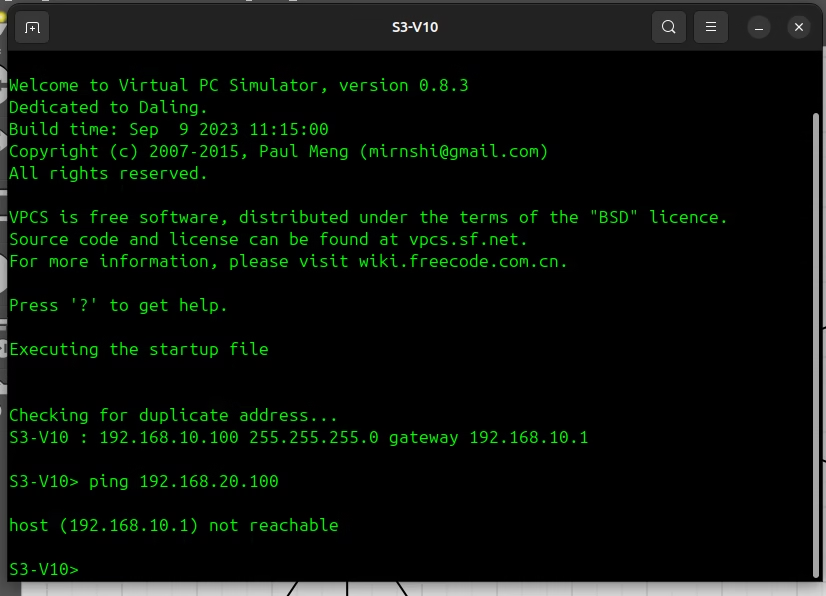

3. Test 2: Inter-VLAN Segmentation (Failure)

Now, let’s test our segmentation. A ping between two different VLANs (without a router) must fail. This proves our VLANs are properly isolated.

From S3-V10, ping S3-V20: ping 192.168.20.100

This ping fails as expected. S3-V10 is in VLAN 10, and S3-V20 is in VLAN 20. The switch correctly prevents traffic from crossing these L2 boundaries.

Conclusion: L2 Foundation Secured, L3 Awaits

This is where we’ll pause for now. You’ve successfully built and secured the crucial Layer 2 underpinnings of your modern data centre fabric. From assigning VLANs using the powerful NVUE object model to proactively preventing broadcast storms with an explicitly configured and protected STP topology, you have the necessary foundation.

But this is just the beginning. The real power of this Clos architecture is unleashed at Layer 3.

In Part 2 of this series, we will build on this foundation by moving to Layer 3 and configuring the routing protocols that make a Clos architecture so powerful. We’ll explore how to build a true, high-performance L3 fabric using BGP.

In a future article, we will tackle other critical data centre concepts, such as MLAG and Bonding, to build highly available connections to our endpoints.
